The docker-compose file is used in Docker swarm mode. The metadata DB is Postgres on a separate RDS instance.

I want to scale the current setup to 3 EC2 instances (1 master, 2 nodes), where the master runs the webserver, scheduler, redis and flower, and the workers run on the nodes.

After searching the web and the docs, there are a few things that are still not clear to me and that I would love to know:

- From what I understand, for the nodes to run the workers, the local image that I'm building from the Dockerfile needs to be pushed to some repository (if it's really needed, I would use AWS ECR) so that the Airflow workers can create containers from that image.
- Syncing volumes and env files: right now I'm mounting the volumes and inserting the envs in the docker-compose file.
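To make the layout described above concrete, here is a minimal sketch of a stack file that pins the control services to the master and the workers to the other two nodes. It is not taken from the actual setup: the ECR image reference, the `airflow.env` file name, the host DAG path, and the Airflow 2.x style commands of the official image are all assumptions for illustration. The scheduler and flower are left out for brevity; they would follow the same pattern as the webserver.

```yaml
# Sketch of a swarm stack file. Account ID, region, repository, tag,
# file names and host paths are placeholders, not values from the real setup.
version: "3.8"

services:
  redis:
    image: redis:7
    deploy:
      placement:
        constraints:
          - node.role == manager    # keep the Celery broker on the master

  webserver:
    # Image pulled from a registry that every node can reach (ECR assumed).
    image: "<account-id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>"
    command: webserver              # assumes the official Airflow 2.x image entrypoint
    env_file:
      - ./airflow.env               # hypothetical file name
    ports:
      - "8080:8080"
    deploy:
      placement:
        constraints:
          - node.role == manager

  worker:
    image: "<account-id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>"
    command: celery worker          # Airflow 2.x CLI; 1.10 uses "worker"
    env_file:
      - ./airflow.env
    volumes:
      # Bind mounts are local to each node, so this host path (hypothetical)
      # has to exist, with the same content, on every worker node.
      - /opt/airflow/dags:/opt/airflow/dags
    deploy:
      replicas: 2
      placement:
        max_replicas_per_node: 1    # one worker per node
        constraints:
          - node.role == worker     # run only on the non-manager nodes
```

Two points behind this sketch: `docker stack deploy` ignores the `build` key, so in swarm mode each node has to pull the image from a registry rather than use one built locally on the master, and deploying with the `--with-registry-auth` flag forwards the registry login to the nodes. Volumes are not synced by swarm itself, so the DAG directory would need shared storage or some other sync mechanism across the nodes.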

Kubernetes airflow docker install

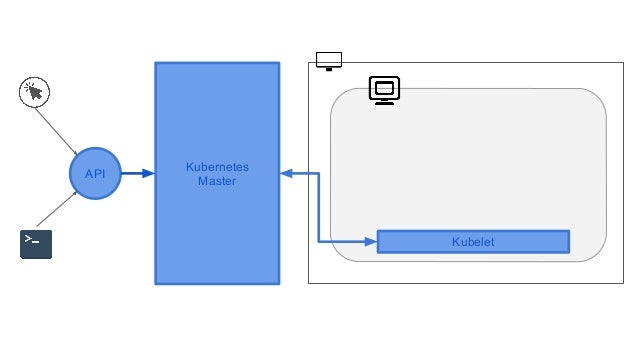

As the title says, I want to set up Airflow to run on a cluster (1 master, 2 nodes) using Docker swarm. Right now I have an Airflow setup that uses the CeleryExecutor and runs on a single EC2 instance. I have a Dockerfile that pulls Airflow's image and runs pip install -r requirements.txt. From this Dockerfile I'm creating a local image, and this image is used in the docker-compose.yml that spins up the different services Airflow needs (webserver, scheduler, redis, flower and some workers).
